CodewCaro.

Why is RAG important when looking at generative AI?

Artificial intelligence is evolving fast, and keeping generative AI models up to date with the latest information is a significant technical challenge. The algorithms behind these models, which are fundamental to creating new content, require both computational power and time to update. This is more than a logistical issue: because updating is expensive and slow, a model's knowledge tends to lag behind the world it is asked about.

Interestingly, some generative AI models are being designed to accommodate incremental updates. This approach could be a game-changer, offering a streamlined solution to the complex problem of keeping these models current. Instead of overhauling the entire model, smaller and more manageable modifications can be made regularly. This not only saves resources but also helps the LLM remain relevant and efficient.

Generative AI models, despite their advanced capabilities, sometimes struggle with gaps in their data. This limitation can lead them to generate inaccurate or entirely fabricated content, known as hallucinations. Hallucinations raise significant concerns about the reliability and trustworthiness of these models' outputs, and the implications are far-reaching given the growing reliance on AI for information and content generation.

How can AI be governed in an agile fashion?

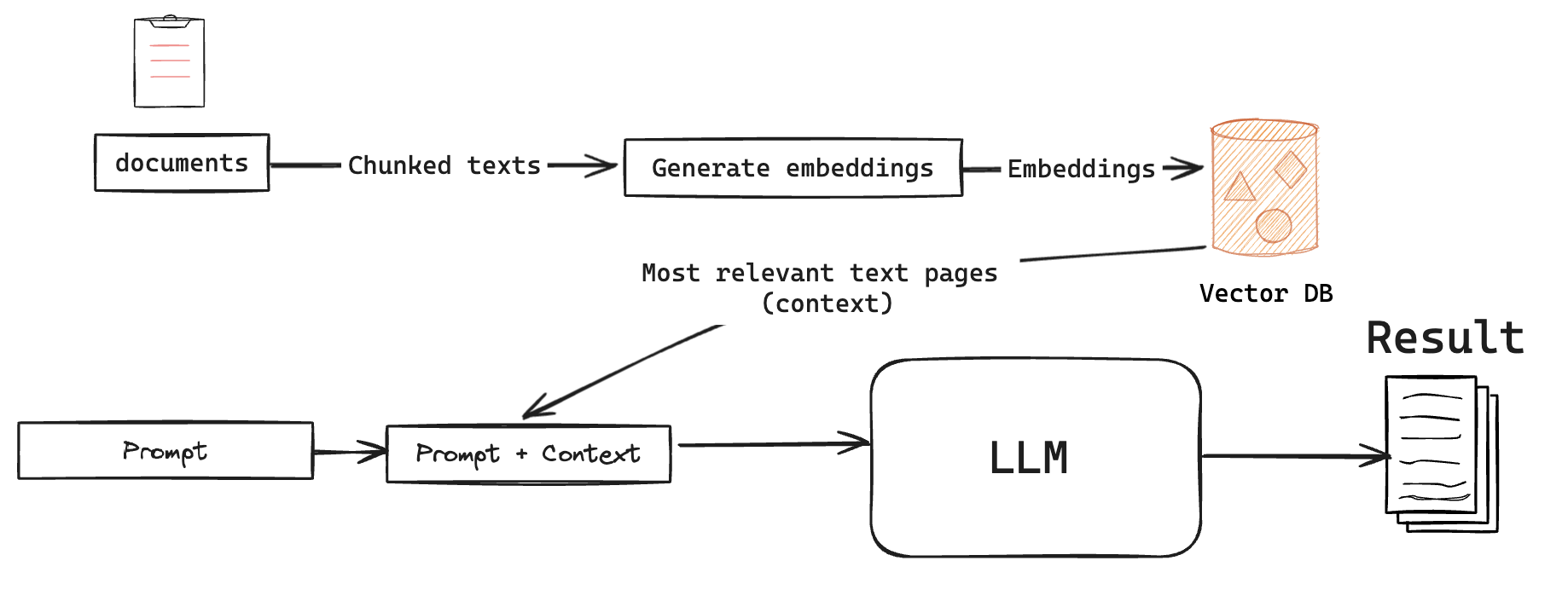

Retrieval-Augmented Generation (RAG) gives us a way to optimize the output by grounding it in relevant context. RAG adds a retrieval step: before the LLM answers, the system searches a database for content relevant to the user's question. The prompt is then filled with that retrieved context before it is sent to the LLM, so the model generates its answer with the relevant information in front of it.
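The retrieve-then-augment flow can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not how Amazon Bedrock or any particular vector database implements it: the in-memory `DOCUMENTS` list stands in for the database, relevance is scored with simple bag-of-words cosine similarity instead of learned embeddings, and `retrieve` and `build_prompt` are illustrative helper names. The resulting prompt string is what would be sent to the LLM.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "knowledge base". In a real system this would be
# a vector database or search index holding your own documents.
DOCUMENTS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support is available Monday to Friday, 9:00 to 17:00 CET.",
    "Enterprise customers get a dedicated account manager.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts (a stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(q, tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Augmentation step: fill the prompt with the retrieved context."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    question = "How many days do I have to return a purchase?"
    passages = retrieve(question, DOCUMENTS, k=1)
    # This prompt string is what would be passed to the LLM.
    print(build_prompt(question, passages))
```

Replacing the word counts with vectors from an embedding model, and the list with a vector store, keeps the same shape: score the stored content against the question, keep the top k results, and place them in the prompt.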

RAG is beneficial in many use cases. For example, at the enterprise level, prompting LLMs together with company-specific context can produce significantly better responses. RAG is also a way to adapt the model on the "frontend", at the prompt level, instead of retraining it. And since Amazon Bedrock does not train its models on the data we provide, we can be confident that confidential data is not used for purposes we have not agreed to.

General-purpose LLMs take a long time to become good at what users ask for. If we can adapt them without machine learning expertise or adjusting model parameters, we should. The combination of RAG and generative AI holds immense potential for fostering innovation across many fields.

RAG combined with LLMs
